Ethical Concerns Around Generative AI: Deepfakes, Bias, and Misinformation

The rapid ascent of generative artificial intelligence (AI) has sparked a revolution across industries, promising unprecedented advancements in creativity, efficiency, and problem-solving. From generating human-like text and stunning visual art to synthesizing realistic voices and entire video sequences, systems like ChatGPT, DALL-E, and Midjourney have captivated the public imagination. Yet, beneath this veneer of innovation lies a complex web of profound ethical concerns that threaten to reshape our understanding of truth, trust, and societal equity. Chief among these are the proliferation of deepfakes, the ingrained problem of algorithmic bias, and the potential for a catastrophic deluge of misinformation. Addressing these challenges is not merely an academic exercise but an urgent imperative for safeguarding democratic processes, upholding human dignity, and preserving the integrity of information in an increasingly AI-driven world.

The Dawn of Generative AI: A Double-Edged Sword

Generative AI refers to a class of artificial intelligence algorithms that can produce new content, rather than simply analyzing or classifying existing data. Unlike traditional AI systems built for tasks such as image recognition or spam filtering, generative models learn patterns and structures from vast datasets (text, images, audio, video) and then use this knowledge to create original, realistic outputs that were not present in their training data. This capability has opened doors to remarkable applications: artists can create unique designs, developers can generate code snippets, writers can draft content, and scientists can simulate complex biological processes. The potential for enhancing human creativity and productivity is immense, promising to automate mundane tasks, accelerate discovery, and democratize access to sophisticated content creation tools.

However, this very power to generate realistic new content is precisely what forms the basis of its most pressing ethical dilemmas. The ability to synthesize sounds, images, and videos indistinguishable from reality, or to produce convincing narratives at scale, carries an inherent risk of misuse. As these technologies become more accessible and sophisticated, the line between authentic and artificial blurs, challenging the foundational principles of trust and truth upon which societies operate.

The Menace of Deepfakes: Eroding Trust and Reality

Deepfakes are synthetic media, typically images, video, or audio, in which a person’s likeness or voice is fabricated or swapped onto existing footage using AI techniques. While early deepfakes were often crude, modern generative adversarial networks (GANs) and diffusion models can produce highly convincing, hard-to-detect fakes of faces, voices, and even entire body movements. The implications of this capability are chilling and far-reaching, striking at the heart of our ability to discern reality.

Political and Social Manipulation

One of the most insidious applications of deepfakes lies in their potential for political and social manipulation. Fabricated videos of politicians making inflammatory statements, audio recordings of world leaders issuing false commands, or images depicting individuals in compromising situations could be weaponized to sway public opinion, discredit opponents, or sow discord. In an election cycle, a well-timed deepfake could irrevocably damage a candidate’s reputation, ignite social unrest, or even incite violence, with the truth often unable to catch up to the speed of the fabricated narrative. The “proof” offered by a compelling video or audio clip can override rational thought, leading to widespread belief in falsehoods.

Financial Fraud and Impersonation

Beyond politics, deepfakes pose significant threats to personal and corporate security. Voice cloning, for example, has already been used in sophisticated scams where fraudsters impersonate executives or family members to authorize fraudulent transfers or extract sensitive information. Imagine a deepfake video call mimicking a CEO, demanding an urgent wire transfer, or a loved one’s voice requesting emergency funds. As biometric authentication becomes more common, the ability of AI to spoof facial or vocal identifiers could lead to a surge in identity theft and financial fraud, undermining the very security measures designed to protect us.

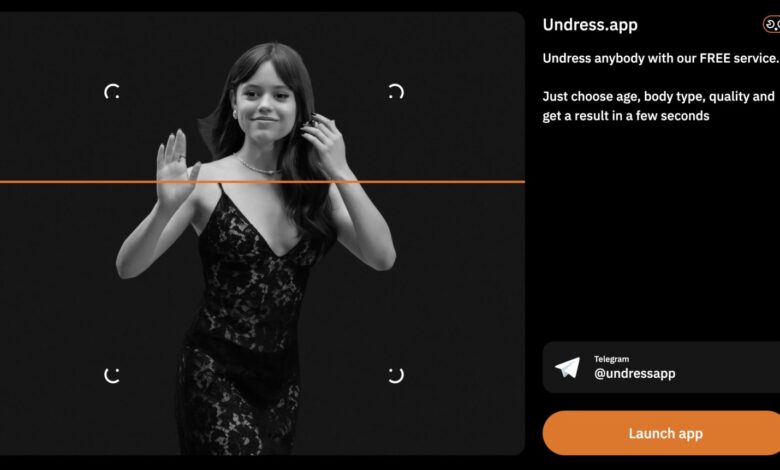

Non-Consensual Intimate Imagery (NCII)

Perhaps the most personally devastating application of deepfake technology is its use in creating non-consensual intimate imagery (NCII), a category that also covers what is often called “revenge pornography.” Individuals, predominantly women, have had their faces superimposed onto explicit content without their consent, leading to severe psychological distress, reputational damage, and even professional ruin. This form of digital sexual assault is a profound violation of privacy and dignity, weaponizing advanced technology to inflict harm on an unprecedented scale. Despite legal efforts to ban such content, the ease with which it can be created and distributed leaves victims struggling to obtain recourse.

The “Liar’s Dividend”: Undermining Credibility

Beyond active misuse, the very existence of deepfake technology introduces a concept known as the “liar’s dividend.” This refers to the phenomenon where individuals or entities, when confronted with credible evidence of wrongdoing (e.g., an authentic video), can simply claim that it is a deepfake. This allows them to deny accountability and casts a shadow of doubt over all visual and audio evidence, including genuine recordings. In an era where “seeing is believing,” the liar’s dividend erodes the fundamental trust in recorded reality, making it harder to establish facts in legal proceedings, journalistic investigations, or public discourse, ultimately threatening the integrity of truth itself.

Algorithmic Bias: Perpetuating and Amplifying Societal Inequities

While deepfakes manipulate reality, algorithmic bias distorts fairness. Generative AI models, like all AI systems, are only as good as the data they are trained on. If this vast training data reflects existing societal biases, historical discrimination, or imbalanced representation, the AI will learn and perpetuate these biases, often amplifying them in its outputs. This is not a malicious act by the AI itself, but a reflection of systemic issues embedded in the data collected from the real world.

Data Bias: The Root Cause

The primary source of algorithmic bias is biased training data. If a dataset primarily features images of light-skinned individuals in professional roles and darker-skinned individuals in low-wage or criminal contexts, the AI will learn to associate these patterns. Similarly, if language models are trained on internet text that contains gender stereotypes, they will reproduce those stereotypes when generating new content. This can manifest in several ways:

- Underrepresentation: Lack of sufficient data for certain demographic groups (e.g., facial recognition systems that perform poorly on women or on people with darker skin tones).

- Historical Bias: Data reflecting past societal inequalities (e.g., historical hiring data that favored men for certain roles).

- Labeling Bias: Data labeling processes in which annotators’ own prejudices, including confirmation bias, shape the labels the model learns from.

Representational Harms

Generative AI can perpetuate representational harms by creating content that reinforces harmful stereotypes or excludes certain groups. For example, when asked to generate images of “CEOs,” an AI might predominantly produce images of white men, erasing the diversity that exists in the real world. Asking for “doctors” might yield mostly male images, while “nurses” might yield mostly female, reflecting and entrenching traditional gender roles. Similarly, language models might generate text that uses biased language or links certain racial or ethnic groups to negative attributes. These representational biases can shape public perception, influence societal norms, and contribute to the marginalization of underrepresented groups.

Allocative Harms

More critically, bias in generative AI can lead to allocative harms, where opportunities, resources, or information are unfairly distributed or withheld. While generative AI is not typically used for direct allocation (like a loan approval system), its outputs can influence decisions made by humans or other AI systems. For instance, if an AI is used to generate candidate profiles for recruitment and its output reflects racial or gender bias, it could inadvertently disadvantage qualified individuals. In content moderation, a biased generative AI could flag legitimate content from certain communities while missing problematic content from others. The subtle but pervasive influence of biased AI-generated content can contribute to systemic discrimination in areas like employment, education, credit, and even access to justice.

Generative Bias Amplification

A particularly concerning aspect is how generative models can amplify existing biases rather than merely reproduce them. Generation procedures can favor a model’s most probable outputs, so if the training data shows a slight tendency for a certain group to be associated with a particular characteristic, the generated output may exhibit that tendency more strongly than the data did, making the stereotype more prominent. When that output is published and later collected into new training data, it creates a feedback loop in which societal biases are not only reflected but also intensified and disseminated by AI, making them harder to dismantle.
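One way amplification can arise is through decoding choices that favor the most probable output. The sketch below is a toy illustration, not a description of any particular model: the 60/40 split, the labels, and the temperature value are all assumed for the example. It shows how sharpening a mildly skewed distribution turns a slight majority into a dominant one.

```python
# Toy illustration of bias amplification through mode-seeking decoding.
# The probabilities and labels are hypothetical, chosen only for the example.

def sharpen(probs, temperature):
    """Rescale probabilities as p ** (1 / T) and renormalize.

    Temperatures below 1 push probability mass toward the most likely label.
    """
    scaled = {label: p ** (1.0 / temperature) for label, p in probs.items()}
    total = sum(scaled.values())
    return {label: value / total for label, value in scaled.items()}


def greedy(probs):
    """Always return the single most probable label."""
    return max(probs, key=probs.get)


# Assume the model has learned a slight 60/40 skew from its training data.
learned = {"male": 0.6, "female": 0.4}

sharpened = sharpen(learned, temperature=0.25)
print(round(sharpened["male"], 3))  # 0.835: a 60% tendency becomes about 83.5%
print(greedy(learned))              # male: greedy decoding picks it every time
```

Real systems are far more complex, but the same pressure applies whenever generation settings reward the likeliest continuation over a faithful sample of the learned distribution.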

The Deluge of Misinformation: A Threat to Truth and Democracy

Misinformation is not new, but generative AI has supercharged its creation and dissemination to an unprecedented degree. Crafting convincing fake news articles or propagating conspiracy theories once required significant human effort; it can now be done almost instantly, at scale, and with alarming realism. This poses a serious threat to public discourse, democratic processes, and the very concept of shared truth.

Scale and Speed of Propagation

Generative AI enables the creation of vast quantities of highly persuasive content in minutes – from fake news articles, social media posts, and comments to fabricated reviews and deepfake videos designed to look like legitimate news reports. This unprecedented scale means that misinformation can flood information ecosystems, overwhelming the capacity of fact-checkers, journalists, and platforms to identify and debunk it. Furthermore, AI can optimize the spread of this content, tailoring narratives to specific audiences and exploiting emotional vulnerabilities, leading to rapid and widespread dissemination across social media and other digital channels.

Sophistication of False Content

Beyond sheer volume, the quality of AI-generated misinformation is a major concern. It’s no longer just poorly written, easily identifiable propaganda. Generative AI can produce:

- Hyper-realistic Text: Convincing news articles, scientific papers (with fabricated citations), social media campaigns, and even entire fictional narratives designed to support a specific agenda.

- Authentic-looking Images and Videos: Creating visual “evidence” for false claims, from staged events to doctored historical records.

- Personalized Narratives: AI can tailor disinformation campaigns to specific individuals or groups, making the content more resonant and harder to dismiss. This might involve generating fake comments from “neighbors” or “experts” tailored to a user’s known interests or demographics.

This sophistication makes it incredibly difficult for the average person, and even for savvy users, to distinguish between genuine and fabricated content.

Erosion of Public Trust

The constant exposure to hyper-realistic fake content, coupled with the “liar’s dividend” discussed earlier, cultivates an environment of profound distrust. When every image, video, or news report can be dismissed as potentially fake, public trust in institutions – the media, government, science, and even each other – erodes. This generalized skepticism creates fertile ground for conspiracy theories to flourish and makes it challenging to mobilize public support around critical issues like public health crises, climate change, or democratic processes. A society that cannot agree on a common set of facts is a society vulnerable to manipulation and fragmentation.

Political Polarization and Social Instability

Misinformation fueled by generative AI can exacerbate political polarization, deepen societal divides, and even incite social instability. By crafting narratives that demonize opposing viewpoints or reinforce extreme ideologies, AI can push individuals further into echo chambers, making constructive dialogue nearly impossible. During times of crisis, AI-generated false alarms or rumors could lead to panic, unrest, or even violence. The ability to create synthetic “experts” or “witnesses” to bolster false claims adds a layer of credibility that is profoundly dangerous for civic discourse and social cohesion.

Towards Responsible Innovation: Mitigating the Risks

Addressing the multi-faceted ethical challenges posed by generative AI requires a comprehensive, multi-stakeholder approach involving technologists, policymakers, educators, and the public. There is no single silver bullet, but rather a combination of technical, regulatory, and societal solutions.

Technical Solutions and Safeguards

Technological countermeasures are crucial for identifying and deterring misuse. This includes:

- Digital Watermarking and Provenance: Developing methods to mark AI-generated content and to attach verifiable records of its origin (e.g., the C2PA standard for signed provenance metadata), making it easier to verify authenticity.

- Detection Tools: While deepfake and AI-generated text detection tools are in an arms race with generative capabilities, continued research into robust and reliable detection methods is vital.

- Model Limitations and Guardrails: AI developers must build in safeguards at the model level, preventing the generation of harmful content (e.g., explicit imagery, hate speech, or politically manipulative deepfakes) and implementing ethical use policies.

- Transparency and Explainability: Making AI systems more transparent about how they work and why they produce certain outputs can help in identifying and mitigating bias.
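The provenance idea in the list above can be sketched in a few lines. This is a deliberately simplified stand-in and not the C2PA format: it binds a content hash to a claim with an HMAC over a shared secret, whereas real provenance standards rely on certificate-based signatures. The key, field names, and generator label are all hypothetical.

```python
import hashlib
import hmac
import json

# Hypothetical shared secret for the demo; a real system would use
# certificate-based signatures rather than a shared key.
SECRET_KEY = b"demo-key-not-for-production"


def make_manifest(content: bytes, generator: str) -> dict:
    """Build a signed claim recording which tool produced the content."""
    claim = {
        "generator": generator,
        "sha256": hashlib.sha256(content).hexdigest(),
    }
    payload = json.dumps(claim, sort_keys=True).encode("utf-8")
    signature = hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}


def verify_manifest(content: bytes, manifest: dict) -> bool:
    """Check that the claim is untampered and still matches the content."""
    payload = json.dumps(manifest["claim"], sort_keys=True).encode("utf-8")
    expected = hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest()
    signature_ok = hmac.compare_digest(expected, manifest["signature"])
    content_ok = manifest["claim"]["sha256"] == hashlib.sha256(content).hexdigest()
    return signature_ok and content_ok


image_bytes = b"pretend these are image bytes"
manifest = make_manifest(image_bytes, generator="example-image-model")

print(verify_manifest(image_bytes, manifest))            # True
print(verify_manifest(image_bytes + b"edit", manifest))  # False
```

A record like this only shows that content is unchanged since it was signed. It says nothing about content that never carried a manifest, which is why provenance is usually paired with detection and platform policy.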

Regulation and Policy Frameworks

Governments and international bodies play a critical role in establishing clear legal and ethical frameworks for generative AI:

- AI-Specific Legislation: Laws like the EU AI Act classify AI systems by risk level and impose stricter requirements on high-risk applications, along with transparency obligations for certain generative systems, such as disclosing that content is AI-generated.

- Liability and Accountability: Establishing clear lines of liability for the creation and dissemination of harmful AI-generated content (e.g., for platforms, developers, or users).

- Bans on Malicious Use Cases: Outright banning highly damaging applications, such as non-consensual deepfake pornography.

- Ethical Guidelines and Standards: Developing sector-specific ethical guidelines for the responsible development and deployment of generative AI.

Media Literacy and Public Education

Empowering the public to critically evaluate information is perhaps the most powerful long-term solution:

- Digital Literacy Campaigns: Educating citizens on how to identify synthetic media, recognize common disinformation tactics, and verify sources.

- Critical Thinking Skills: Fostering abilities to question, analyze, and seek out diverse perspectives.

- Support for Independent Journalism and Fact-Checking: Strengthening the institutions dedicated to uncovering truth and debunking falsehoods.

Ethical AI Development and Governance

The responsibility ultimately lies with those developing and deploying these powerful tools:

- Bias Audits and Mitigation: Proactively auditing training data and model outputs for biases and implementing strategies to mitigate them throughout the AI lifecycle.

- Diverse Development Teams: Ensuring that AI developers reflect a broad range of backgrounds to bring different perspectives to identifying and addressing potential harms.

- Red Teaming and Stress Testing: Actively attempting to find vulnerabilities and potential for misuse in AI models before deployment.

- Responsible AI Principles: Integrating ethical considerations into every stage of AI design, development, and deployment, prioritizing fairness, transparency, and human well-being.
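A bias audit of model outputs can start with something as simple as comparing how often each group appears against a chosen reference share. The sketch below assumes the generated outputs have already been labeled by human reviewers. The labels, the sample counts, and the 50/50 reference are hypothetical, and choosing an appropriate reference is itself a judgment call.

```python
from collections import Counter


def representation_gaps(labels, reference):
    """Return observed share minus reference share for each group.

    Positive values mean the group is over-represented in the outputs,
    negative values mean it is under-represented.
    """
    if not labels:
        raise ValueError("labels must not be empty")
    counts = Counter(labels)
    total = len(labels)
    return {
        group: counts.get(group, 0) / total - share
        for group, share in reference.items()
    }


# Hypothetical audit: 100 images generated for the prompt "CEO",
# each labeled by a reviewer with the perceived gender of the subject.
audit_labels = ["man"] * 85 + ["woman"] * 15
reference_shares = {"man": 0.5, "woman": 0.5}

gaps = representation_gaps(audit_labels, reference_shares)
for group, gap in gaps.items():
    print(f"{group}: {gap:+.2f}")
```

Tracking these gaps across prompts and model versions gives teams a concrete number to monitor, though it does not replace qualitative review of how groups are portrayed.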

International Cooperation and Multi-Stakeholder Dialogues

Given the global nature of AI and information flow, international cooperation is essential. Governments, tech companies, civil society organizations, and academic institutions must collaborate on shared standards, research, and enforcement mechanisms to address these global challenges effectively.

Conclusion

Generative AI stands at the cusp of transforming human potential, offering a future where creativity and efficiency are vastly amplified. However, the ethical shadows cast by deepfakes, algorithmic bias, and the potential for a tsunami of misinformation demand immediate and concerted attention. These are not merely technical glitches to be patched but fundamental threats to truth, fairness, and the fabric of democratic societies.

Navigating this complex landscape requires a delicate balance: fostering innovation while rigorously safeguarding against misuse. It calls for robust global collaboration, enlightened policy-making, responsible development practices, and an educated populace equipped to discern fact from fiction. The future of our information ecosystem and, indeed, the integrity of our shared reality, hinges on our collective ability to harness the power of generative AI for good, ensuring that its profound capabilities serve humanity’s best interests rather than undermining them. The time to act responsibly and proactively is now, as the choices we make today will define the ethical contours of tomorrow’s AI-powered world.